Page 75 - DCAP603_DATAWARE_HOUSING_AND_DATAMINING

P. 75

Unit 4: Data Mining Classification

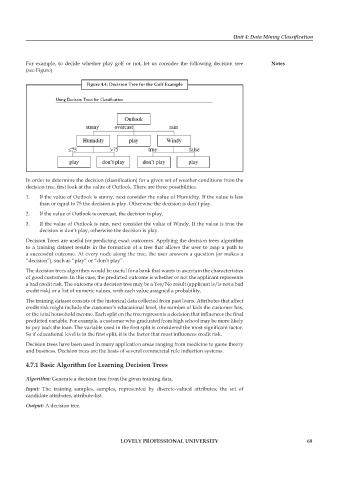

For example, to decide whether play golf or not, let us consider the following decision tree notes

(see Figure)

figure 4.4: Decision tree for the golf example

In order to determine the decision (classification) for a given set of weather conditions from the

decision tree, first look at the value of Outlook. There are three possibilities.

1. If the value of Outlook is sunny, next consider the value of Humidity. If the value is less

than or equal to 75 the decision is play. Otherwise the decision is don’t play.

2. If the value of Outlook is overcast, the decision is play.

3. If the value of Outlook is rain, next consider the value of Windy. If the value is true the

decision is don’t play, otherwise the decision is play.

Decision Trees are useful for predicting exact outcomes. Applying the decision trees algorithm

to a training dataset results in the formation of a tree that allows the user to map a path to

a successful outcome. At every node along the tree, the user answers a question (or makes a

“decision”), such as “play” or “don’t play”.

The decision trees algorithm would be useful for a bank that wants to ascertain the characteristics

of good customers. In this case, the predicted outcome is whether or not the applicant represents

a bad credit risk. The outcome of a decision tree may be a Yes/No result (applicant is/is not a bad

credit risk) or a list of numeric values, with each value assigned a probability.

The training dataset consists of the historical data collected from past loans. Attributes that affect

credit risk might include the customer’s educational level, the number of kids the customer has,

or the total household income. Each split on the tree represents a decision that influences the final

predicted variable. For example, a customer who graduated from high school may be more likely

to pay back the loan. The variable used in the first split is considered the most significant factor.

So if educational level is in the first split, it is the factor that most influences credit risk.

Decision trees have been used in many application areas ranging from medicine to game theory

and business. Decision trees are the basis of several commercial rule induction systems.

4.7.1 Basic algorithm for Learning Decision trees

Algorithm: Generate a decision tree from the given training data.

Input: The training samples, samples, represented by discrete-valued attributes; the set of

candidate attributes, attribute-list.

Output: A decision tree.

LoveLy professionaL university 69