Page 168 - DCAP208_Management Support Systems

P. 168

Unit 10: Data Mining Tools and Techniques

Notes

Table 10.2: Some of the Differences between the Nearest-Neighbor Data Mining

Technique and Clustering

Nearest Neighbor Clustering

Used for prediction as well as Used mostly for consolidating data into a high-level

consolidation. view and general grouping of records into like

behaviors.

Space is defined by the problem to be Space is defined as default n-dimensional space, or

solved (supervised learning). is defined by the user, or is a predefined space

driven by past experience (unsupervised learning).

Generally only uses distance metrics to Can use other metrics besides distance to determine

determine nearness. nearness of two records - for example linking two

points together.

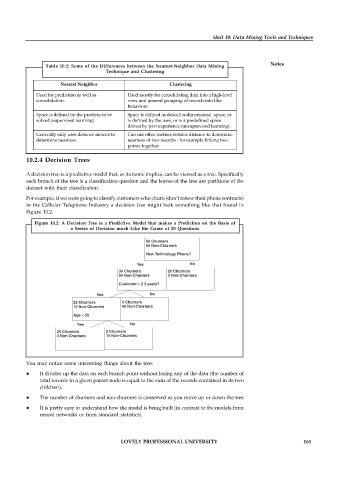

10.2.4 Decision Trees

A decision tree is a predictive model that, as its name implies, can be viewed as a tree. Specifically

each branch of the tree is a classification question and the leaves of the tree are partitions of the

dataset with their classification.

For example, if we were going to classify customers who churn (don’t renew their phone contracts)

in the Cellular Telephone Industry a decision tree might look something like that found in

Figure 10.2.

Figure 10.2: A Decision Tree is a Predictive Model that makes a Prediction on the Basis of

a Series of Decision much Like the Game of 20 Questions

50 Churners

50 Non-Churners

New Technology Phone?

Yes No

30 Churners 20 Churners

50 Non-Churners 0 Non-Churners

Customer < 2.3 years?

Yes No

25 Churners 5 Churners

10 Non-Churners 40 Non-Churners

Age < 55

Yes No

20 Churners 5 Churners

0 Non-Churners 10 Non-Churners

You may notice some interesting things about the tree:

It divides up the data on each branch point without losing any of the data (the number of

total records in a given parent node is equal to the sum of the records contained in its two

children).

The number of churners and non-churners is conserved as you move up or down the tree

It is pretty easy to understand how the model is being built (in contrast to the models from

neural networks or from standard statistics).

LOVELY PROFESSIONAL UNIVERSITY 161